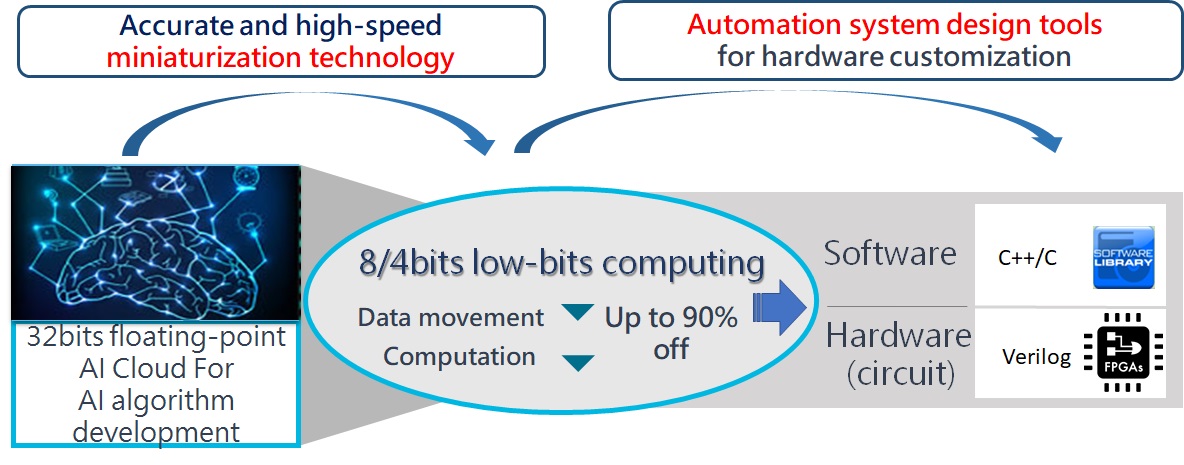

Reduce the memory footprint of edge AI systems on TPU, FPGA, DSP, and CPU platforms, so that complex AI algorithms (more than 70 million parameters) can run on them.
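To show why bit width dominates the footprint, the sketch below computes weight storage for a 70-million-parameter model at 32, 8, and 4 bits. It counts weights only and assumes dense storage with no packing overhead. Activations, buffers, and runtime memory are not included, and the helper name `weight_memory_bytes` is hypothetical.

```python
def weight_memory_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store the weights alone (no activations, no runtime overhead)."""
    return num_params * bits_per_param // 8

# Round figure taken from the "more than 70 million" in the text (illustrative only).
NUM_PARAMS = 70_000_000

for bits in (32, 8, 4):
    mb = weight_memory_bytes(NUM_PARAMS, bits) / 1e6  # decimal megabytes
    print(f"{bits:>2}-bit weights: {mb:.0f} MB")
```

Under these assumptions, moving from 32-bit floats to 8-bit integers cuts weight storage from about 280 MB to 70 MB. Moving to 4-bit cuts it to 35 MB.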

Enable low-bit (8-bit/4-bit) computation on edge AI systems that delivers the same quality of results as 32-bit floating-point computation on cloud servers.
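The text does not say which quantization scheme is used. The sketch below therefore illustrates one common technique, per-tensor symmetric linear quantization, as an assumption. Each float weight is mapped to a signed integer via a single scale factor and then mapped back, so the rounding error can be measured. The function names and the synthetic Gaussian weights are hypothetical stand-ins.

```python
import random

def quantize_symmetric(values, bits):
    """Per-tensor symmetric linear quantization to signed integers of the given width."""
    qmax = 2 ** (bits - 1) - 1              # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the symmetric range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Map integer levels back to approximate float values."""
    return [qi * scale for qi in q]

def max_abs_error(a, b):
    return max(abs(x - y) for x, y in zip(a, b))

random.seed(0)
# Synthetic stand-in for one layer's float32 weights (not real model data).
weights = [random.gauss(0.0, 0.05) for _ in range(10_000)]

for bits in (8, 4):
    q, scale = quantize_symmetric(weights, bits)
    err = max_abs_error(weights, dequantize(q, scale))
    print(f"{bits}-bit: scale={scale:.6f}, max |error|={err:.6f} (bound scale/2={scale / 2:.6f})")
```

In this scheme, the per-weight rounding error is bounded by half the scale, and the scale grows as the bit width shrinks. Matching 32-bit quality in practice usually also relies on further techniques such as calibration or quantization-aware training, which this sketch does not cover.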

Edge AI units work together with cloud servers. Data is processed in real time at the edge, and only a small amount of it needs to be uploaded to the cloud for advanced analysis, which greatly reduces transmission risks and costs.

Provide a flexible hardware platform with a reconfigurable architecture on which multiple AI algorithms can run in parallel.